RIT Research Computing supplies advanced research technology resources and support to researchers in their quest to discover.

On this site you will find a list of scholarly publications that utilized RIT Research Computing services, including the High-Performance Computing (HPC) cluster, Spack software packages/environments, Ceph storage, file shares, virtual machines, REDCap (https://redcap.rc.rit.edu/), Mirrors (https://mirrors.rit.edu/), GitLab (https://git.rc.rit.edu/ – formerly https://kgcoe-git.rit.edu), and consultation (debugging, workflow optimization, grant proposals, data management plans).

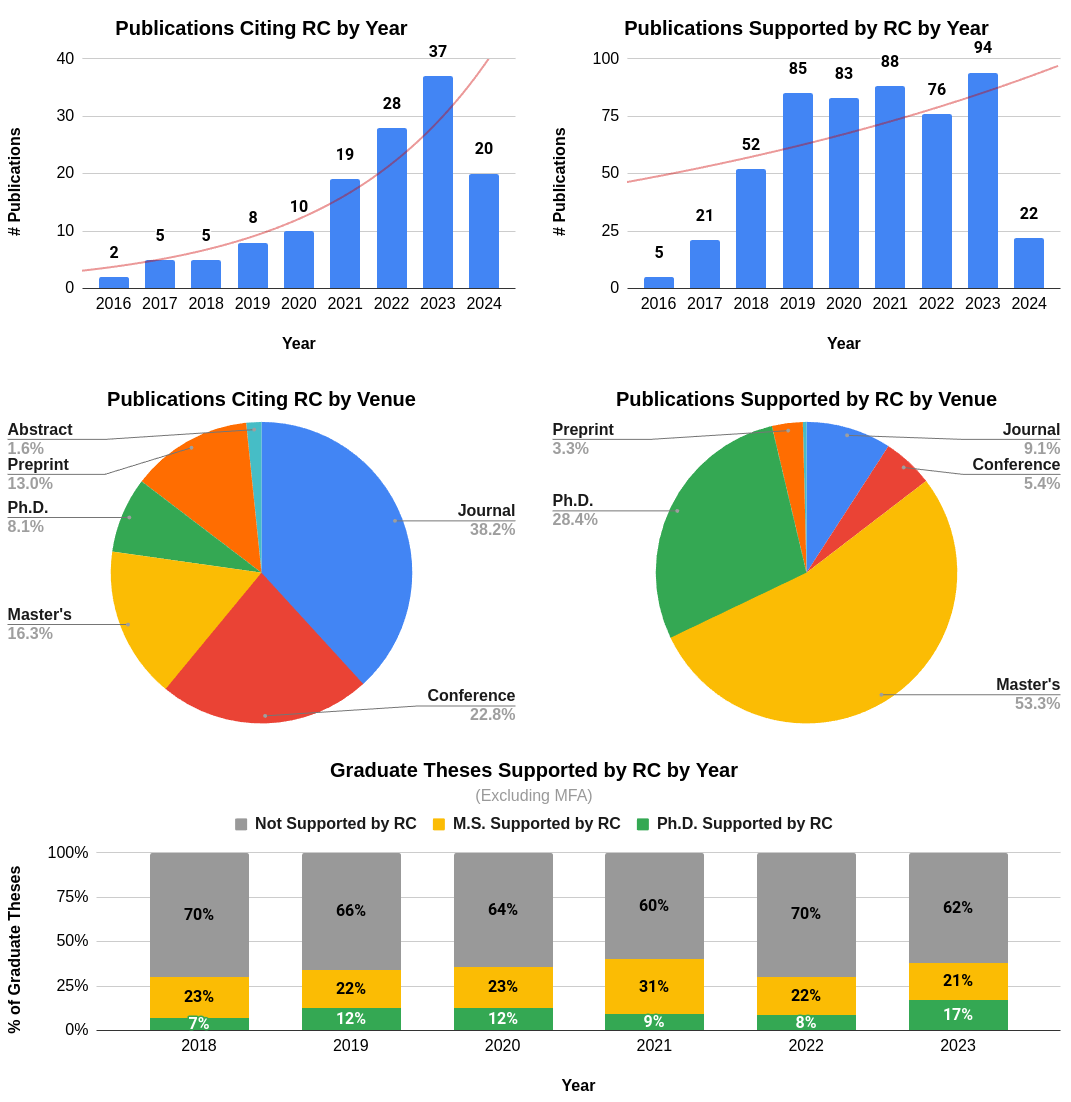

Statistics

Since 2016, RIT researchers have published at least 526 scholarly works with support from Research Computing. The uptick in usage in 2019 correlates with the execution of the 2018 Research Computing Services Proposal, notably the installation of the current HPC cluster, SPORC, in late 2018.

Notes:

- Publications are reported by calendar year, not fiscal or academic year

- Master’s Thesis data for 2016-2017 is still being processed

Data Sources

This data was collected from multiple sources:

- Graduate Theses published on repository.rit.edu and/or ProQuest cross-referenced with internal logs of Cluster, Spack, Storage, Virtual Machine, GitLab, REDCap, and Mirrors usage

- Publications that cite Research Computing’s DOI

- Search queries run against Google Scholar

- Publications self-reported by RIT researchers using this form

Citation & Acknowledgment

Example Acknowledgment

The authors acknowledge Research Computing at the Rochester Institute of Technology for providing computational resources and support that have contributed to the research results reported in this publication.

Citation

Rochester Institute of Technology. Research Computing Services. Rochester Institute of Technology. https://doi.org/10.34788/0S3G-QD15

Bibtex:

@misc{https://doi.org/10.34788/0s3g-qd15,

doi = {10.34788/0S3G-QD15},

url = {https://www.rit.edu/researchcomputing/},

author = {{Rochester Institute of Technology}},

title = {Research Computing Services},

publisher = {Rochester Institute of Technology},

year = {<YEAR_OF_SUPPORT>}

}

RIS:

T1 - Research Computing Services

AU - Rochester Institute of Technology

DO - 10.34788/0S3G-QD15

UR - https://www.rit.edu/researchcomputing/

AB - RIT Research Computing supplies advanced research technology resources and support to researchers in their quest to discover.

PY - <YEAR_OF_SUPPORT>

PB - Rochester Institute of Technology

ER -
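Both citation templates above leave the year as `<YEAR_OF_SUPPORT>`. As a minimal sketch of filling it in before adding the entry to a reference manager, the Python snippet below substitutes a year into copies of the two templates. The function name `fill_year` and the example year 2023 are illustrative assumptions, not part of the official citation. The RIS abstract line is omitted for brevity, and the author field is double-braced here on the assumption that the institution should be treated as a single corporate name rather than parsed as a personal name.

```python
PLACEHOLDER = "<YEAR_OF_SUPPORT>"

# Templates adapted from the Bibtex and RIS entries above.
# The double braces around the author are an assumption of this sketch:
# they keep BibTeX from splitting the institution into first/last names.
BIBTEX_TEMPLATE = """@misc{https://doi.org/10.34788/0s3g-qd15,
  doi = {10.34788/0S3G-QD15},
  url = {https://www.rit.edu/researchcomputing/},
  author = {{Rochester Institute of Technology}},
  title = {Research Computing Services},
  publisher = {Rochester Institute of Technology},
  year = {<YEAR_OF_SUPPORT>}
}"""

RIS_TEMPLATE = """T1 - Research Computing Services
AU - Rochester Institute of Technology
DO - 10.34788/0S3G-QD15
UR - https://www.rit.edu/researchcomputing/
PY - <YEAR_OF_SUPPORT>
PB - Rochester Institute of Technology
ER -"""


def fill_year(template: str, year: int) -> str:
    """Return a copy of the template with the year placeholder replaced."""
    if PLACEHOLDER not in template:
        raise ValueError("template has no year placeholder")
    if not 1000 <= year <= 9999:
        raise ValueError("year must have four digits")
    return template.replace(PLACEHOLDER, str(year))


if __name__ == "__main__":
    # 2023 is a hypothetical year of support, used only for illustration.
    print(fill_year(BIBTEX_TEMPLATE, 2023))
    print()
    print(fill_year(RIS_TEMPLATE, 2023))
```

Use the year in which Research Computing services supported the work, which may differ from the year the paper was published.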

Contact Us

If you are an RIT researcher who has used Research Computing services in pursuit of a publication, please fill out this survey to tell us about your publication so we can add it to this site. Thank you and congratulations!

If you notice an error on this site, please submit a ticket or contact us on Slack.

Journal Papers

[J-2024-08] Mathews, Nate and Holland, James K and Hopper, Nicholas and Wright, Matthew. (2024). “LASERBEAK: Evolving Website Fingerprinting Attacks with Attention and Multi-Channel Feature Representation.” IEEE Transactions on Information Forensics and Security.

Abstract: In this paper, we present Laserbeak, a new state-of-the-art website fingerprinting attack for Tor that achieves nearly 96% accuracy against FRONT-defended traffic by combining two innovations: 1) multi-channel traffic representations and 2) advanced techniques adapted from state-of-the-art computer vision models. Our work is the first to explore a range of different ways to represent traffic data for a classifier. We find a multi-channel input format that provides richer contextual information, enabling the model to learn robust representations even in the presence of heavy traffic obfuscation. We are also the first to examine how recent advances in transformer models can take advantage of these representations. Our novel model architecture utilizing multi-headed attention layers enhances the capture of both local and global patterns. By combining these innovations, Laserbeak demonstrates absolute performance improvements of up to 36.2% (e.g., from 27.6% to 63.8%) compared with prior attacks against defended traffic. Experiments highlight Laserbeak’s capabilities in multiple scenarios, including a large open-world dataset where it achieves over 80% recall at 99% precision on traffic obfuscated with padding defenses. These advances reduce the remaining anonymity in Tor against fingerprinting threats, underscoring the need for stronger defenses.

Publisher Site: IEEE Xplore

PDF (Requires Sign-In): PDF

Bibtex:

@article{mathews2024laserbeak,

title = {LASERBEAK: Evolving Website Fingerprinting Attacks with Attention and Multi-Channel Feature Representation},

author = {Mathews, Nate and Holland, James K and Hopper, Nicholas and Wright, Matthew},

journal = {IEEE Transactions on Information Forensics and Security},

year = {2024},

publisher = {IEEE}

}

[J-2024-07] Kasarabada, Viswateja and Nasir Ahamed, Nuzhet Nihaar and Vaghef-Koodehi, Alaleh and Martinez-Martinez, Gabriela and Lapizco-Encinas, Blanca H. (2024). “Separating the Living from the Dead: An Electrophoretic Approach.” Analytical Chemistry.

Abstract: Cell viability studies are essential in numerous applications, including drug development, clinical analysis, bioanalytical assessments, food safety, and environmental monitoring. Microfluidic electrokinetic (EK) devices have been proven to be effective platforms to discriminate microorganisms by their viability status. Two decades ago, live and dead Escherichia coli (E. coli) cells were trapped at distinct locations in an insulator-based EK (iEK) device with cylindrical insulating posts. At that time, the discrimination between live and dead cells was attributed to dielectrophoretic effects. This study presents the continuous separation between the live and dead E. coli cells, which was achieved primarily by combining linear and nonlinear electrophoretic effects in an iEK device. First, live and dead E. coli cells were characterized in terms of their electrophoretic migration, and then the properties of both live and dead E. coli cells were input into a mathematical model built using COMSOL Multiphysics software to identify appropriate voltages for performing an iEK separation in a T-cross iEK channel. Subsequently, live and dead cells were successfully separated experimentally in the form of an electropherogram, achieving a separation resolution of 1.87. This study demonstrated that linear and nonlinear electrophoresis phenomena are responsible for the discrimination between live and dead cells under DC electric fields in iEK devices. Continuous electrophoretic assessments, such as the one presented here, can be used to discriminate between distinct types of microorganisms including live and dead cell assessments.

Publisher Site: ACS

PDF (Requires Sign-In): PDF

Bibtex:

@article{kasarabada2024separating,

title = {Separating the Living from the Dead: An Electrophoretic Approach},

author = {Kasarabada, Viswateja and Nasir Ahamed, Nuzhet Nihaar and Vaghef-Koodehi, Alaleh and Martinez-Martinez, Gabriela and Lapizco-Encinas, Blanca H},

journal = {Analytical Chemistry},

year = {2024},

publisher = {ACS Publications}

}

[J-2024-06] Uche, Obioma U. (2024). “A DFT Analysis of the Cuboctahedral to Icosahedral Transformation of Gold-Silver Nanoparticles.” Computational Materials Science.

Abstract: In the current work, we investigate the transformation mechanics of gold-silver nanoparticles with cuboctahedral and icosahedral geometries by varying relevant attributes including size, composition, morphology, and chemical order. Our findings reveal that the transformation occurs via a martensitic, symmetric mechanism, irrespective of the specific attributes for all nanoparticles under consideration. The associated transformation barriers are observed to be strongly dependent on both size and composition as the activation energies increase with higher silver content. The chemical order is also a significant factor for determining how readily the transformation occurs since core–shell nanoparticles with gold exteriors display higher barriers in comparison to their silver counterparts. Likewise, for a given composition, core–shell morphologies indicate reduced ease of transformation relative to alloy nanoparticles.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Bibtex:

@article{uche2024dft,

title = {A DFT analysis of the cuboctahedral to icosahedral transformation of gold-silver nanoparticles},

author = {Uche, Obioma U},

journal = {Computational Materials Science},

volume = {244},

pages = {113262},

year = {2024},

publisher = {Elsevier}

}

[J-2024-05] Mathew, Roshan and Dannels, Wendy A. (2024). “Transforming Language Access for Deaf Patients in Healthcare.” The Journal on Technology and Persons with Disabilities.

Abstract: Deaf patients often depend on sign language interpreters and real-time captioners for communication during healthcare appointments. While they prefer in-person services, the scarcity of local qualified professionals and the faster turnaround times of remote options like Video Remote Interpreting (VRI) and remote captioning often lead hospitals and clinics to choose these alternatives. These remote methods often cause visibility and cognitive challenges for deaf patients, as they are forced to split their focus between the VRI or captioning screen and the healthcare provider, hindering their comprehension of medical information. This study proposes the use of augmented reality (AR) smart glasses as an alternative to traditional VRI and remote captioning methods. Certified healthcare interpreters and captioners are key stakeholders in improving communication for deaf patients in medical settings. Therefore, this study explores the perspectives of 25 interpreters and 22 captioners regarding adopting an AR smart glasses application to facilitate language accessibility in healthcare contexts. The findings indicate that interpreters prefer in-person services but recognize the advantages of using a smart glasses application when in-person services are not feasible. In contrast, captioners show a strong inclination toward the smart glasses application over traditional captioning techniques.

Publisher Site: Calstate

PDF (Requires Sign-In): PDF

Bibtex:

@article{mathew2024transforming,

title = {Transforming Language Access for Deaf Patients in Healthcare},

author = {Mathew, Roshan and Dannels, Wendy A},

journal = {The Journal on Technology and Persons with Disabilities},

pages = {277},

year = {2024}

}

[J-2024-04] Nasir Ahamed, Nuzhet Nihaar and Mendiola-Escobedo, Carlos A. and Perez-Gonzalez, Victor H. and Lapizco-Encinas, Blanca H. (2024). “Development of a DC-Biased AC-Stimulated Microfluidic Device for the Electrokinetic Separation of Bacterial and Yeast Cells.” Biosensors.

Abstract: Electrokinetic (EK) microsystems, which are capable of performing separations without the need for labeling analytes, are a rapidly growing area in microfluidics. The present work demonstrated three distinct binary microbial separations, computationally modeled and experimentally performed, in an insulator-based EK (iEK) system stimulated by DC-biased AC potentials. The separations had an increasing order of difficulty. First, a separation between cells of two distinct domains (Escherichia coli and Saccharomyces cerevisiae) was demonstrated. The second separation was for cells from the same domain but different species (Bacillus subtilis and Bacillus cereus). The last separation included cells from two closely related microbial strains of the same domain and the same species (two distinct S. cerevisiae strains). For each separation, a novel computational model, employing a continuous spatial and temporal function for predicting the particle velocity, was used to predict the retention time (tR,p) of each cell type, which aided the experimentation. All three cases resulted in separation resolution values Rs>1.5, indicating complete separation between the two cell species, with good reproducibility between the experimental repetitions (deviations < 6%) and good agreement (deviations < 18%) between the predicted tR,p and experimental (tR,e) retention time values. This study demonstrated the potential of DC-biased AC iEK systems for performing challenging microbial separations.

Publisher Site: MDPI

PDF (Requires Sign-In): PDF

Bibtex:

@article{nasir2024development,

title = {Development of a DC-Biased AC-Stimulated Microfluidic Device for the Electrokinetic Separation of Bacterial and Yeast Cells},

author = {Nasir Ahamed, Nuzhet Nihaar and Mendiola-Escobedo, Carlos A and Perez-Gonzalez, Victor H and Lapizco-Encinas, Blanca H},

journal = {Biosensors},

volume = {14},

number = {5},

pages = {237},

year = {2024},

publisher = {MDPI}

}

[J-2024-03] Chowdhury, Md Towhidul Absar and Contractor, Maheen Riaz and Rivero, Carlos R. (2024). “Flexible Control Flow Graph Alignment for Delivering Data-Driven Feedback to Novice Programming Learners.” Journal of Systems and Software.

Abstract: Supporting learners in introductory programming assignments at scale is a necessity. This support includes automated feedback on what learners did incorrectly. Existing approaches cast the problem as automatically repairing learners' incorrect programs extrapolating the data from an existing correct program from other learners. However, such approaches are limited because they only compare programs with similar control flow and order of statements. A potentially valuable set of repair feedback from flexible comparisons is thus missing. In this paper, we present several modifications to CLARA, a data-driven automated repair approach that is open source, to deal with real-world introductory programs. We extend CLARA's abstract syntax tree processor to handle common introductory programming constructs. Additionally, we propose a flexible alignment algorithm over control flow graphs where we enrich nodes with semantic annotations extracted from programs using operations and calls. Using this alignment, we modify an incorrect program's control flow graph to match correct programs to apply CLARA's original repair process. We evaluate our approach against a baseline on the twenty most popular programming problems in Codeforces. Our results indicate that flexible alignment has a significantly higher percentage of successful repairs at 46% compared to 5% for baseline CLARA. Our implementation is available at https://github.com/towhidabsar/clara

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Bibtex:

@article{chowdhury2024flexible,

title = {Flexible control flow graph alignment for delivering data-driven feedback to novice programming learners},

author = {Chowdhury, Md Towhidul Absar and Contractor, Maheen Riaz and Rivero, Carlos R},

journal = {Journal of Systems and Software},

volume = {210},

pages = {111960},

year = {2024},

publisher = {Elsevier}

}

[J-2024-02] Ko, Eunmi. (2024). “An Affine Term Structure Model with Fed Chairs’ Speeches.” Finance Research Letters.

Abstract: I analyze the impact of the sentiment expressed in speeches by Fed chairs on Treasury bond yields, using an affine term structure model. The speeches by Fed chairs are numerically evaluated to generate three sentiment factors: negative, neutral, and positive. The variance decomposition analysis indicates that sentiment factors account for a considerable portion of the variance in yield forecasts.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Bibtex:

@article{ko2024affine,

title = {An affine term structure model with fed chairs' speeches},

author = {Ko, Eunmi},

journal = {Finance Research Letters},

pages = {105336},

year = {2024},

publisher = {Elsevier}

}

[J-2024-01] James, Winston and Perez-Raya, Isaac. (2024). “3D Simulations of Nucleate Boiling with Sharp Interface VOF and Localized Adaptive Mesh Refinement in Ansys-Fluent.” ASME Journal of Heat and Mass Transfer.

Abstract: The present work demonstrates the use of customized Ansys-Fluent in performing 3D numerical simulations of nucleate boiling with a sharp interface and adaptive mesh refinement. The developed simulation approach is a reliable and effective tool to investigate 3D boiling phenomena by accurately capturing the thermal and fluid dynamic interfacial vapor-liquid interaction and reducing computational time. These methods account for 3D sharp interface and thermal conditions of saturation temperature refining the mesh around the bubble edge. User-Defined-Functions (UDFs) were developed to customize the software Ansys-Fluent to preserve the interface sharpness, maintain saturation temperature conditions, and perform effective adaptive mesh refinement in a localized region around the interface. Adaptive mesh refinement is accomplished by a UDF that identifies the cells near the contact line and the liquid-vapor interface and applies the adaptive mesh refinement algorithms only at the identified cells. Validating the approach considered spherical bubble growth with an observed acceptable difference between theoretical and simulation bubble growth rates of 10%. Bubble growth simulations with water reveal an influence region of 2.7 times the departure bubble diameter, and average heat transfer coefficient of 15000 W/m²-K. In addition, the results indicate a reduced computational time of 75 hours using adaptive mesh compared to uniform mesh.

Publisher Site: ASME Digital Collection

PDF (Requires Sign-In): PDF

Bibtex:

@article{james20243d,

title = {3D Simulations of Nucleate Boiling with Sharp Interface VOF and Localized Adaptive Mesh Refinement in Ansys-Fluent},

author = {James, Winston and Perez-Raya, Isaac},

journal = {ASME Journal of Heat and Mass Transfer},

pages = {1--47},

year = {2024}

}

[J-2023-12] Grant, Michael J. and Fingler, Brennan J. and Buchanan, Natalie and Padmanabhan, Poornima. (2023). “Coil–Helix Block Copolymers Can Exhibit Divergent Thermodynamics in the Disordered Phase.” Journal of Chemical Theory and Computation.

Abstract: Chiral building blocks have the ability to self-assemble and transfer chirality to larger hierarchical length scales, which can be leveraged for the development of novel nanomaterials. Chiral block copolymers, where one block is made completely chiral, are prime candidates for studying this phenomenon, but fundamental questions regarding the self-assembly are still unanswered. For one, experimental studies using different chemistries have shown unexplained diverging shifts in the order–disorder transition temperature. In this study, particle-based molecular simulations of chiral block copolymers in the disordered melt were performed to uncover the thermodynamic behavior of these systems. A wide range of helical models were selected, and several free energy calculations were performed. Specifically, we aimed to understand (1) the thermodynamic impact of changing the conformation of one block in chemically identical block copolymers and (2) the effect of the conformation on the Flory–Huggins interaction parameter, χ, when chemical disparity was introduced. We found that the effective block repulsion exhibits diverging behavior, depending on the specific conformational details of the helical block. Commonly used conformational metrics for flexible or stiff block copolymers do not capture the effective block repulsion because helical blocks are semiflexible and aspherical. Instead, pitch can quantitatively capture the effective block repulsion. Quite remarkably, the shift in χ for chemically dissimilar block copolymers can switch sign with small changes in the pitch of the helix.

Publisher Site: ACS Publications

PDF (Requires Sign-In): PDF

Bibtex:

@article{grant2023coil,

title = {Coil--Helix Block Copolymers Can Exhibit Divergent Thermodynamics in the Disordered Phase},

author = {Grant, Michael J and Fingler, Brennan J and Buchanan, Natalie and Padmanabhan, Poornima},

journal = {Journal of Chemical Theory and Computation},

year = {2023},

publisher = {ACS Publications}

}

[J-2023-11] Ebmeyer, William and Dholabhai, Pratik P. (2023). “High-Throughput Prediction of Oxygen Vacancy Defect Migration Near Misfit Dislocations in SrTiO3/BaZrO3 Heterostructures.” Materials Advances.

Abstract: Among their numerous technological applications, semi-coherent oxide heterostructures have emerged as promising candidates for applications in intermediate temperature solid oxide fuel cell electrolytes, wherein interfaces influence ionic transport. Since misfit dislocations impact ionic transport in these materials, oxygen vacancy formation and migration at misfit dislocations in oxide heterostructures is central to its performance as an ionic conductor. Herein, we report high-throughput atomistic simulations to predict thousands of activation energy barriers for oxygen vacancy migration at misfit dislocations in SrTiO3/BaZrO3 heterostructures. Dopants display a noticeable effect as higher activation energies are uncovered in their vicinity. Interface layer chemistry has a fundamental influence on the magnitude of activation energy barriers since they are dissimilar at misfit dislocations as compared to coherent terraces. Lower activation energies are uncovered when oxygen vacancies migrate toward misfit dislocations, but higher energies when they hop away, revealing that oxygen vacancies would get trapped at misfit dislocations and impact ionic transport. Results herein offer atomic scale insights into ionic transport at misfit dislocations and fundamental factors governing ionic conductivity of thin film oxide electrolytes.

Publisher Site: RSC.org

PDF (Requires Sign-In): PDF

Bibtex:

@article{ebmeyer2023high,

title = {High-throughput prediction of oxygen vacancy defect migration near misfit dislocations in SrTiO3/BaZrO3 heterostructures},

author = {Ebmeyer, William and Dholabhai, Pratik P},

journal = {Materials Advances},

year = {2023},

publisher = {Royal Society of Chemistry}

}

[J-2023-10] Apergi, Lida A. and Bjarnadóttir, Margrét Vilborg and Baras, John S. and Golden, Bruce L. (2023). “Cost Patterns of Multiple Chronic Conditions: A Novel Modeling Approach Using a Condition Hierarchy.” INFORMS Journal on Data Science.

Abstract: Healthcare cost predictions are widely used throughout the healthcare system. However, predicting these costs is complex because of both uncertainty and the complex interactions of multiple chronic diseases: chronic disease treatment decisions related to one condition are impacted by the presence of the other conditions. We propose a novel modeling approach inspired by backward elimination, designed to minimize information loss. Our approach is based on a cost hierarchy: the cost of each condition is modeled as a function of the number of other, more expensive chronic conditions the individual member has. Using this approach, we estimate the additive cost of chronic diseases and study their cost patterns. Using large-scale claims data collected from 2007 to 2012, we identify members that suffer from one or more chronic conditions and estimate their total 2012 healthcare expenditures. We apply regression analysis and clustering to characterize the cost patterns of 69 chronic conditions. We observe that the estimated cost of some conditions (for example, organic brain problem) decreases as the member's number of more expensive chronic conditions increases. Other conditions, such as obesity and paralysis, demonstrate the opposite pattern; their contribution to the overall cost increases as the member's number of other more serious chronic conditions increases. The modeling framework allows us to account for the complex interactions of multimorbidity and healthcare costs and, therefore, offers a deeper and more nuanced understanding of the cost burden of chronic conditions, which can be utilized by practitioners and policy makers to plan, design better intervention, and identify subpopulations that require additional resources. More broadly, our hierarchical model approach captures complex interactions and can be applied to improve decision making when the enumeration of all possible factor combinations is not possible, for example, in financial risk scoring and pay structure design.

Publisher Site: INFORMS

PDF (Requires Sign-In): PDF

Bibtex:

@article{apergi2023cost,

title = {Cost Patterns of Multiple Chronic Conditions: A Novel Modeling Approach Using a Condition Hierarchy},

author = {Apergi, Lida Anna and Bjarnad{\'o}ttir, Margr{\'e}t Vilborg and Baras, John S and Golden, Bruce L},

journal = {INFORMS Journal on Data Science},

year = {2023},

publisher = {INFORMS}

}

[J-2023-09] Rajendran, Madhusudan and Ferran, Maureen C. and Mouli, Leora and Babbitt, Gregory A. and Lynch, Miranda L. (2023). “Evolution of Drug Resistance Drives Destabilization of Flap Region Dynamics in HIV-1 Protease.” Biophysical Reports.

Abstract: The HIV-1 protease is one of several common key targets of combination drug therapies for human immunodeficiency virus infection and acquired immunodeficiency syndrome (HIV/AIDS). During the progression of the disease, some individual patients acquire drug resistance due to mutational hotspots on the viral proteins targeted by combination drug therapies. It has recently been discovered that drug-resistant mutations accumulate on the 'flap region' of the HIV-1 protease, which is a critical dynamic region involved in non-specific polypeptide binding during invasion and infection of the host cell. In this study, we utilize machine learning assisted comparative molecular dynamics, conducted at single amino acid site resolution, to investigate the dynamic changes that occur during functional dimerization, and drug-binding of wild-type and common drug-resistant versions of the main protease. We also use a multi-agent machine learning model to identify conserved dynamics of the HIV-1 main protease that are preserved across simian and feline protease orthologs (SIV and FIV). We find that a key conserved functional site in the flap region, a solvent-exposed isoleucine (Ile50) that controls flap dynamics is functionally targeted by drug-resistance mutations, leading to amplified molecular dynamics affecting the functional ability of the flap region to hold the drugs. We conclude that better long term patient outcomes may be achieved by designing drugs that target protease regions which are less dependent upon single sites with large functional binding effects.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Bibtex:

@article{rajendran2023evolution,

title = {Evolution of drug resistance drives destabilization of flap region dynamics in HIV-1 protease},

author = {Rajendran, Madhusudan and Ferran, Maureen C and Mouli, Leora and Babbitt, Gregory A and Lynch, Miranda L},

journal = {Biophysical Reports},

year = {2023},

publisher = {Elsevier}

}

[J-2023-08] Marzano, Chloe and Dholabhai, Pratik P. (2023). “High-Throughput Prediction of Thermodynamic Stabilities of Dopant-Defect Clusters at Misfit Dislocations in Perovskite Oxide Heterostructures.” Journal of Physical Chemistry C.

Abstract: Complex oxide heterostructures and thin films have emerged as promising candidates for diverse applications, wherein interfaces formed by joining two different oxides play a central role in novel properties that are not present in the individual components. Lattice mismatch between the two oxides leads to the formation of misfit dislocations, which often influence vital material properties. In oxides, doping is used as a strategy to improve properties, wherein inclusion of aliovalent dopants leads to formation of oxygen vacancy defects. At low temperatures, these dopants and defects often form stable clusters. In semicoherent perovskite oxide heterostructures, the stability of such clusters at misfit dislocations, while not well understood, is anticipated to impact interface-governed properties. Herein, we report atomistic simulations elucidating the influence of misfit dislocations on the stability of dopant-defect clusters in SrTiO3/BaZrO3 heterostructures. SrO–BaO, SrO-ZrO2, BaO-TiO2, and ZrO2-TiO2 interfaces having dissimilar misfit dislocation structures were considered. High-throughput computing was implemented to predict the thermodynamic stabilities of 275,610 dopant-defect clusters in the vicinity of misfit dislocations. The misfit dislocation structure of the given interface and corresponding atomic layer chemistry play a fundamental role in influencing the thermodynamic stability of geometrically diverse clusters. A stark difference in cluster stability is observed at misfit dislocation lines and intersections as compared to the coherent terraces. These results offer an atomic scale perspective of the complex interplay between dopants, point defects, and extended defects, which is necessary to comprehend the functionalities of the perovskite oxide heterostructures.

Publisher Site: ACS Publications

PDF (Requires Sign-In): PDF

Bibtex:

@article{marzano2023high,

title = {High-Throughput Prediction of Thermodynamic Stabilities of Dopant-Defect Clusters at Misfit Dislocations in Perovskite Oxide Heterostructures},

author = {Marzano, Chloe and Dholabhai, Pratik P},

journal = {The Journal of Physical Chemistry C},

year = {2023},

publisher = {ACS Publications}

}

[J-2023-07] Lyu, Zimeng and Ororbia, Alexander and Desell, Travis. (2023). “Online Evolutionary Neural Architecture Search for Multivariate Non-Stationary Time Series Forecasting.” Applied Soft Computing.

Abstract: Time series forecasting (TSF) is one of the most important tasks in data science. TSF models are usually pre-trained with historical data and then applied on future unseen datapoints. However, real-world time series data is usually non-stationary and models trained offline usually face problems from data drift. Models trained and designed in an offline fashion cannot adapt to changes quickly or be deployed in real-time. To address these issues, this work presents the Online NeuroEvolution-based Neural Architecture Search (ONE-NAS) algorithm, which is a novel neural architecture search method capable of automatically designing and dynamically training recurrent neural networks (RNNs) for online forecasting tasks. Without any pre-training, ONE-NAS utilizes populations of RNNs that are continuously updated with new network structures and weights in response to new multivariate input data. ONE-NAS is tested on real-world, large-scale multivariate wind turbine data as well as the univariate Dow Jones Industrial Average (DJIA) dataset. Results demonstrate that ONE-NAS outperforms traditional statistical time series forecasting methods, including online linear regression, fixed long short-term memory (LSTM) and gated recurrent unit (GRU) models trained online, as well as state-of-the-art, online ARIMA strategies. Additionally, results show that utilizing multiple populations of RNNs which are periodically repopulated provides significant performance improvements, allowing this online neural network architecture design and training to be successful.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Bibtex:

@article{lyu2023online,

title = {Online evolutionary neural architecture search for multivariate non-stationary time series forecasting},

author = {Lyu, Zimeng and Ororbia, Alexander and Desell, Travis},

journal = {Applied Soft Computing},

year = {2023},

publisher = {Elsevier}

}

[J-2023-06] Keithley, Kimberlee S.M. and Palmerio, Jacob and Escobedo IV, Hector A. and Bartlett, Jordyn and Huang, Henry and Villasmil, Larry A. and Cromer, Michael. (2023). “Role of Shear Thinning in the Flow of Polymer Solutions Around a Sharp Bend.” Rheologica Acta.

Abstract: In flows with re-entrant corners, polymeric fluids can exhibit a recirculation region along the wall upstream from the corner. In general, the formation of these vortices is controlled by both the extensional and shear rheology of the material. More importantly, these regions can only form for sufficiently elastic fluids and are often called "lip vortices". These elastic lip vortices have been observed in the flows of complex fluids in geometries with sharp bends. In this work, we characterize the roles played by elasticity and shear thinning in the formation of the lip vortices. Simulations of the Newtonian, Bird-Carreau, and Oldroyd-B models reveal that elasticity is a necessary element. A systematic study of the White-Metzner, finitely extensible non-linear elastic (FENE-P), Giesekus and Rolie-Poly models shows that the onset and size of the elastic lip vortex is governed by a combination of both the degree of shear thinning and the critical shear rate at which the thinning begins.

Publisher Site: SpringerLink

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{keithley2023role,

title = {Role of shear thinning in the flow of polymer solutions around a sharp bend},

author = {Keithley, Kimberlee SM and Palmerio, Jacob and Escobedo IV, Hector A and Bartlett, Jordyn and Huang, Henry and Villasmil, Larry A and Cromer, Michael},

journal = {Rheologica Acta},

pages = {1--15},

year = {2023},

publisher = {Springer}

}

[J-2023-05] Nur, Nayma Binte and Bachmann, Charles M. (2023). “Comparison of Soil Moisture Content Retrieval Models Utilizing Hyperspectral Goniometer Data and Hyperspectral Imagery from an Unmanned Aerial System.” Journal of Geophysical Research: Biogeosciences.

Abstract: To understand surface biogeophysical processes, accurately evaluating the geographical and temporal fluctuations of soil moisture is crucial. It is well known that the surface soil moisture content (SMC) affects soil reflectance at all solar spectrum wavelengths. Therefore, future satellite missions, such as the NASA Surface Biology and Geology (SBG) mission, will be essential for mapping and monitoring global soil moisture changes. Our study compares two widely used moisture retrieval models: the multilayer radiative transfer model of soil reflectance (MARMIT) and the soil water parametric (SWAP)-Hapke model. We evaluated the SMC retrieval accuracy of these models using unmanned aerial systems (UAS) hyperspectral imagery and goniometer hyperspectral data. Laboratory analysis employed hyperspectral goniometer data of sediment samples from four locations reflecting diverse environments, while field validation used hyperspectral UAS imaging and coordinated ground truth collected in 2018 and 2019 from a barrier island beach at the Virginia Coast Reserve Long-Term Ecological Research site. The (SWAP)-Hapke model achieves comparable accuracy to MARMIT using laboratory hyperspectral data but is less accurate when applied to UAS hyperspectral imagery than the MARMIT model. We proposed a modified version of the (SWAP)-Hapke model, which achieves better results than MARMIT when applied to laboratory spectral measurements; however, MARMIT performance is still more accurate when applied to UAS imagery. These results are likely due to differences in the models' descriptions of multiply-scattered light and MARMIT's more detailed description of air-water interactions.

Publisher Site: Wiley

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{nur2023comparison,

title = {Comparison of Soil Moisture Content Retrieval Models Utilizing Hyperspectral Goniometer Data and Hyperspectral Imagery from an Unmanned Aerial System},

author = {Nur, Nayma Binte and Bachmann, Charles M},

journal = {Journal of Geophysical Research: Biogeosciences},

year = {2023},

publisher = {Wiley Online Library}

}

[J-2023-04] Olatunji, Isaac and Cui, Feng. (2023). “Multimodal AI for Prediction of Distant Metastasis in Carcinoma Patients.” Frontiers in Bioinformatics.

Abstract: Metastasis of cancer is directly related to death in almost all cases, however a lot is yet to be understood about this process. Despite advancements in the available radiological investigation techniques, not all cases of Distant Metastasis (DM) are diagnosed at initial clinical presentation. Also, there are currently no standard biomarkers of metastasis. Early, accurate diagnosis of DM is however crucial for clinical decision making, and planning of appropriate management strategies. Previous works have achieved little success in attempts to predict DM from either clinical, genomic, radiology, or histopathology data. In this work we attempt a multimodal approach to predict the presence of DM in cancer patients by combining gene expression data, clinical data and histopathology images. We tested a novel combination of Random Forest (RF) algorithm with an optimization technique for gene selection, and investigated if gene expression pattern in the primary tissues of three cancer types (Bladder Carcinoma, Pancreatic Adenocarcinoma, and Head and Neck Squamous Carcinoma) with DM are similar or different. Gene expression biomarkers of DM identified by our proposed method outperformed Differentially Expressed Genes (DEGs) identified by the DESeq2 software package in the task of predicting presence or absence of DM. Genes involved in DM tend to be more cancer type specific rather than general across all cancers. Our results also indicate that multimodal data is more predictive of metastasis than either of the three unimodal data tested, and genomic data provides the highest contribution by a wide margin. The results re-emphasize the importance for availability of sufficient image data when a weakly supervised training technique is used. Code is made available at: https://github.com/rit-cuilab/Multimodal-AI-for-Prediction-of-Distant-Metastasis-in-Carcinoma-Patients.

Publisher Site: PubMed Central

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{olatunji2023multimodal,

title = {Multimodal AI for prediction of distant metastasis in carcinoma patients},

author = {Olatunji, Isaac and Cui, Feng},

journal = {Frontiers in Bioinformatics},

volume = {3},

year = {2023},

publisher = {Frontiers Media SA}

}

[J-2023-03] Childs, Meghan Rowan and Wong, Tony E. (2023). “Assessing Parameter Sensitivity in a University Campus COVID-19 Model with Vaccinations.” Infectious Disease Modelling.

Abstract: From the beginning of the COVID-19 pandemic, universities have experienced unique challenges due to their dual nature as a place of education and residence. Current research has explored non-pharmaceutical approaches to combating COVID-19, including representing in models different categories such as age groups. One key area not currently well represented in models is the effect of pharmaceutical preventative measures, specifically vaccinations, on COVID-19 spread on college campuses. There remain key questions on the sensitivity of COVID-19 infection rates on college campuses to potentially time-varying vaccine immunity. Here we introduce a compartment model that decomposes a campus population into constituent subpopulations and implements vaccinations with time-varying efficacy. We use this model to represent a campus population with both vaccinated and unvaccinated individuals, and we analyze this model using two metrics of interest: maximum isolation population and symptomatic infection. We demonstrate a decrease in symptomatic infections occurs for vaccinated individuals when the frequency of testing for unvaccinated individuals is increased. We find that the number of symptomatic infections is insensitive to the frequency of testing of the unvaccinated subpopulation once about 80% or more of the population is vaccinated. Through a Sobol' global sensitivity analysis, we characterize the sensitivity of modeled infection rates to these uncertain parameters. We find that in order to manage symptomatic infections and the maximum isolation population campuses must minimize contact between infected and uninfected individuals, promote high vaccine protection at the beginning of the semester, and minimize the number of individuals developing symptoms.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{childs2023assessing,

title = {Assessing parameter sensitivity in a university campus COVID-19 model with vaccinations},

author = {Childs, Meghan Rowan and Wong, Tony E},

journal = {Infectious Disease Modelling},

year = {2023},

publisher = {Elsevier}

}

[J-2023-02] Bergstrom, Austin C. and Messinger, David W. (2023). “Image Quality and Computer Vision Performance: Assessing the Effects of Image Distortions and Modeling Performance Relationships Using the General Image Quality Equation.” Journal of Electronic Imaging.

Abstract: Substantial research has explored methods to optimize convolutional neural networks (CNNs) for tasks such as image classification and object detection, but research into the image quality drivers of computer vision performance has been limited. Additionally, there are indications that image degradations such as blur and noise affect human visual interpretation and machine interpretation differently. The general image quality equation (GIQE) predicts overhead image quality for human analysis using the National Image Interpretability Rating Scale, but no such model exists to predict image quality for interpretation by CNNs. Here, we assess the relationship between image quality variables and CNN performance. Specifically, we examine the impacts of resolution, blur, and noise on CNN performance for models trained with in-distribution and out-of-distribution distortions. Using two datasets, we observe that while generalization remains a significant challenge for CNNs faced with out-of-distribution image distortions, CNN performance against low visual quality images remains strong with appropriate training, indicating the potential to expand the design trade space for sensors providing data to computer vision systems. Additionally, we find that CNN performance predictions using the functional form of the GIQE can predict CNN performance as a function of image degradation, but we observe that the legacy form of the GIQE (from GIQE versions 3 and 4) does a better job of modeling the impact of blur/relative edge response in our experiments.

Publisher Site: SPIE

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{bergstrom2023image,

title = {Image quality and computer vision performance: assessing the effects of image distortions and modeling performance relationships using the general image quality equation},

author = {Bergstrom, Austin C and Messinger, David W},

journal = {Journal of Electronic Imaging},

volume = {32},

number = {2},

pages = {023018},

year = {2023},

publisher = {SPIE}

}

[J-2023-01] Shipkowski, S.P. and Perez-Raya, I. (2023). “Precise and Analytical Calculation of Interface Surface Area in Sharp Interfaces and Multiphase Modeling.” International Journal of Heat and Mass Transfer.

Abstract: Various kinds of Piecewise Linear Interface Calculations (PLIC) are commonly used in Computational Fluid Dynamics (CFD) for multiphase flows. Here, a new method is proposed to calculate the interface size for CFD simulations. This PLIC method with the modifier, Analytic Size Based [method], (PLIC-ASB), being that the calculation requires a symbolic equation and no approximation or numerical methods. The primary advantage of the PLIC-ASB is maintaining a sharp interface and providing for physics-based (not iterative or empirically derived factors) mass transfer due to the cell-by-cell accuracy. Along with the sharp interface, this method leads to precise interface displacements and suppresses parasitic velocities; altogether, this means an accurate model of the temperature distribution near the interface can be achieved. The PLIC-ASB method was compared directly against the VOF gradient method (most prevalent PLIC in multiphase simulations). Static testing for accuracy on various geometric shapes expressed as the color function on representative grids of cells. The PLIC-ASB had a better result than the VOF gradient method, with an average relative error of 3% and 13%, respectively. Spherical bubble growth simulations were performed with customized ANSYS-Fluent. Both interface calculation methods were tested. Foremost, the PLIC-ASB method produced experimentally and theoretically anticipated results. Parasitic velocities were problematic in the VOF gradient method simulations but only minor with the use of PLIC-ASB. Further, a trend of accuracy improving with reduced cell size was found with the PLIC-ASB, whereas no trend was present with the VOF-gradient method. The PLIC-ASB method has the characteristic limitations of any PLIC method, most notably requiring a straight-line approximation to suitably represent the multiphase interface; however, the straightforward implementation and accuracy make broad adoption reasonable.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{shipkowski2023precise,

title = {Precise and analytical calculation of interface surface area in sharp interfaces and multiphase modeling},

author = {Shipkowski, SP and Perez-Raya, I},

journal = {International Journal of Heat and Mass Transfer},

volume = {202},

pages = {123683},

year = {2023},

publisher = {Elsevier}

}

[J-2022-14] Rajendran, Madhusudan and Babbitt, Gregory A. (2022). “Persistent Cross-Species SARS-CoV-2 Variant Infectivity Predicted via Comparative Molecular Dynamics Simulation.” Royal Society Open Science.

Abstract: Widespread human transmission of SARS-CoV-2 highlights the substantial public health, economic and societal consequences of virus spillover from wildlife and also presents a repeated risk of reverse spillovers back to naive wildlife populations. We employ comparative statistical analyses of a large set of short-term molecular dynamic (MD) simulations to investigate the potential human-to-bat (genus Rhinolophus) cross-species infectivity allowed by the binding of SARS-CoV-2 receptor-binding domain (RBD) to angiotensin-converting enzyme 2 (ACE2) across the bat progenitor strain and emerging human strain variants of concern (VOC). We statistically compare the dampening of atom motion across protein sites upon the formation of the RBD/ACE2 binding interface using various bat versus human target receptors (i.e. bACE2 and hACE2). We report that while the bat progenitor viral strain RaTG13 shows some pre-adaption binding to hACE2, it also exhibits stronger affinity to bACE2. While early emergent human strains and later VOCs exhibit robust binding to both hACE2 and bACE2, the delta and omicron variants exhibit evolutionary adaption of binding to hACE2. However, we conclude there is a still significant risk of mammalian cross-species infectivity of human VOCs during upcoming waves of infection as COVID-19 transitions from a pandemic to endemic status.

Publisher Site: The Royal Society

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{rajendran2022persistent,

title = {Persistent cross-species SARS-CoV-2 variant infectivity predicted via comparative molecular dynamics simulation},

author = {Rajendran, Madhusudan and Babbitt, Gregory A},

journal = {Royal Society Open Science},

volume = {9},

number = {11},

pages = {220600},

year = {2022},

publisher = {The Royal Society}

}

[J-2022-13] Medlar, Michael and Hensel, Edward. (2022). “Transient 3-D Thermal Simulation of a Fin-FET Transistor with Electron-Phonon Heat Generation, Three Phonon Scattering, and Drift with Periodic Switching.” Journal of Heat Transfer.

Abstract: The International Roadmap for Devices and Systems shows FinFET array transistors as the mainstream structure for logic devices. Traditional methods of Fourier heat transfer analysis that are typical of Technology Computer Aided Design (TCAD) software are invalid at the length and time scales associated with these devices. Traditional models for phonon transport modeling have not demonstrated the ability to accurately model 3-d, transient transistor thermal responses. An engineering design tool is needed to accurately predict the thermal response of FinFET transistor arrays. The Statistical Phonon Transport Model (SPTM) was applied in a 3-d, transient manner to predict non-equilibrium phonon transport in an SOI-FinFET array transistor with a 60 nm long fin and a 20 nm channel length. A heat generation profile from electron-phonon scattering was applied in a transient manner to model switching. Simulation results indicated an excess build-up of up to 17% optical phonons giving rise to transient local temperature hot spots of 37 Kelvin in the drain region. The local build-up of excess optical phonons in the drain region has implications on performance and reliability. The SPTM is a valid engineering design tool for evaluating the thermal performance of emergent proposed FinFET transistor designs. The phonon fidelity of the SPTM is greater than Monte Carlo and the Boltzmann Transport Equation and the length scale and time scale fidelity of the SPTM is better than Direct Atomic Simulation.

Publisher Site: ASME Digital Collection

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{medlar2022transient,

title = {Transient 3-D Thermal Simulation of a Fin-FET Transistor with Electron-Phonon Heat Generation, Three Phonon Scattering, and Drift with Periodic Switching},

ISSN = {1528-8943},

url = {http://dx.doi.org/10.1115/1.4056002},

DOI = {10.1115/1.4056002},

journal = {Journal of Heat Transfer},

publisher = {ASME International},

author = {Medlar, Michael and Hensel, Edward},

year = {2022},

month = {Oct}

}

[J-2022-12] Uche, Obioma U. and Sidorick, Micah. (2022). “A Comparative Analysis of the Vibrational and Structural Properties of Nearly Incommensurate Overlayer Systems.” Surface Science.

Abstract: Using molecular dynamics simulations based on embedded atom method potentials, we have investigated the vibrational properties of the following three systems of nearly hexagonal noble metal monolayers adsorbed on substrates of square symmetry: Ag on Cu(001), Ag on Ni(001) and Au on Ni(001). The corresponding local phonon density of states, mean square amplitudes, and substrate interlayer relaxation of the above systems are compared to identify similarities and differences. A key finding is that the Ag monolayers have a stronger influence on both phonon spectra and vibrational amplitudes of the topmost layer of the Cu(001) surface than that of the nickel substrates. Furthermore, the vibrational amplitudes of the overlayer atoms are shown to be anisotropic and dominant along the incommensurate direction for the three systems. Temperature effects on the vibrational and structural properties are also discussed.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{uche2022comparative,

title = {A comparative analysis of the vibrational and structural properties of nearly incommensurate overlayer systems},

author = {Uche, Obioma U and Sidorick, Micah},

journal = {Surface Science},

volume = {717},

pages = {121989},

year = {2022},

publisher = {Elsevier}

}

[J-2022-11] Sankaran, Prashant and McConky, Katie and Sudit, Moises and Ortiz-Peña, Héctor. (2022). “GAMMA: Graph Attention Model for Multiple Agents to Solve Team Orienteering Problem With Multiple Depots.” IEEE Transactions on Neural Networks and Learning Systems.

Abstract: In this work, we present an attention-based encoder-decoder model to approximately solve the team orienteering problem with multiple depots (TOPMD). The TOPMD instance is an NP-hard combinatorial optimization problem that involves multiple agents (or autonomous vehicles) and not purely Euclidean (straight line distance) graph edge weights. In addition, to avoid tedious computations on dataset creation, we provide an approach to generate synthetic data on the fly for effectively training the model. Furthermore, to evaluate our proposed model, we conduct two experimental studies on the multi-agent reconnaissance mission planning problem formulated as TOPMD. First, we characterize the model based on the training configurations to understand the scalability of the proposed approach to unseen configurations. Second, we evaluate the solution quality of the model against several baselines-heuristics, competing machine learning (ML), and exact approaches, on several reconnaissance scenarios. The experimental results indicate that training the model with a maximum number of agents, a moderate number of targets (or nodes to visit), and moderate travel length, performs well across a variety of conditions. Furthermore, the results also reveal that the proposed approach offers a more tractable and higher quality (or competitive) solution in comparison with existing attention-based models, stochastic heuristic approach, and standard mixed-integer programming solver under the given experimental conditions. Finally, the different experimental evaluations reveal that the proposed data generation approach for training the model is highly effective.

Publisher Site: IEEE Xplore

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{sankaran2022gamma,

title = {GAMMA: Graph Attention Model for Multiple Agents to Solve Team Orienteering Problem With Multiple Depots},

author = {Sankaran, Prashant and McConky, Katie and Sudit, Moises and Ortiz-Pe{\~n}a, H{\'e}ctor},

journal = {IEEE Transactions on Neural Networks and Learning Systems},

year = {2022},

publisher = {IEEE}

}

[J-2022-10] Rajendran, Madhusudan and Ferran, Maureen C. and Babbitt, Gregory A. (2022). “Identifying Vaccine Escape Sites via Statistical Comparisons of Short-term Molecular Dynamics.” Biophysical Reports.

Abstract: The identification of viral mutations that confer escape from antibodies is crucial for understanding the interplay between immunity and viral evolution. We describe a molecular dynamics (MD) based approach that goes beyond contact mapping, scales well to a desktop computer with a modern graphics processor, and enables the user to identify functional protein sites that are prone to vaccine escape in a viral antigen. We first implement our MD pipeline to employ site-wise calculation of Kullback-Leibler divergence in atom fluctuation over replicate sets of short-term MD production runs thus enabling a statistical comparison of the rapid motion of influenza hemagglutinin (HA) in both the presence and absence of three well-known neutralizing antibodies. Using this simple comparative method applied to motions of viral proteins, we successfully identified in silico all previously empirically confirmed sites of escape in influenza HA, predetermined via selection experiments and neutralization assays. Upon the validation of our computational approach, we then surveyed potential hot spot residues in the receptor binding domain of the SARS-CoV-2 virus in the presence of COVOX-222 and S2H97 antibodies. We identified many single sites in the antigen-antibody interface that are similarly prone to potential antibody escape and that match many of the known sites of mutations arising in the SARS-CoV-2 variants of concern. In the omicron variant, we find only minimal adaptive evolutionary shifts in the functional binding profiles of both antibodies. In summary, we provide an inexpensive and accurate computational method to monitor hot spots of functional evolution in antibody binding footprints.

Publisher Site: ScienceDirect

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{rajendran2022identifying,

title = {Identifying vaccine escape sites via statistical comparisons of short-term molecular dynamics},

author = {Rajendran, Madhusudan and Ferran, Maureen C and Babbitt, Gregory A},

journal = {Biophysical Reports},

volume = {2},

number = {2},

pages = {100056},

year = {2022},

publisher = {Elsevier}

}

[J-2022-09] Peters, Jared I. and Tang, Lisa and Uche, Obioma U. (2022). “Influence of Size and Composition on the Transformation Mechanics of Gold-Silver Core-Shell Nanoparticles.” The Journal of Physical Chemistry C.

Abstract: Bimetallic nanoparticles occupy a unique space in the field of materials science as they display physical and optoelectronic properties that are absent in their monometallic counterparts. These attributes increase their applicability in niche processes where they can perform distinct functions from those achievable with the corresponding pure-element particles. In the current work, we explore the feasibility of performing structural characterization of gold-silver core-shell nanoclusters as well as analyze the transformation between cuboctahedral and icosahedral geometries using a combination of atomistic simulations and electronic structure calculations. We find that size may be a limiting factor in distinguishing between the above two geometries when theoretical vibrational densities of states are employed in characterizing the nanoparticles under consideration. The results from our density functional theory calculations also reveal that the transformation between cuboctahedral and icosahedral geometries occurs via a martensitic, symmetric mechanism for the 147-atom and 309-atom nanoclusters. In addition, the associated transformation barriers for the bimetallic core-shell particles are strongly size-dependent and typically increase with the composition of silver in the nanocluster.

Publisher Site: ACS Publications

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{peters2022influence,

title = {Influence of Size and Composition on the Transformation Mechanics of Gold--Silver Core--Shell Nanoparticles},

author = {Peters, Jared I and Tang, Lisa and Uche, Obioma U},

journal = {The Journal of Physical Chemistry C},

volume = {126},

number = {15},

pages = {6612--6618},

year = {2022},

publisher = {ACS Publications}

}

[J-2022-08] Meyers, Benjamin S. and Almassari, Sultan Fahad and Keller, Brandon N. and Meneely, Andrew. (2022). “Examining Penetration Tester Behavior in the Collegiate Penetration Testing Competition.” ACM Transactions on Software Engineering and Methodology (TOSEM).

Abstract: Penetration testing is a key practice toward engineering secure software. Malicious actors have many tactics at their disposal, and software engineers need to know what tactics attackers will prioritize in the first few hours of an attack. Projects like MITRE ATT&CK(TM) provide knowledge, but how do people actually deploy this knowledge in real situations? A penetration testing competition provides a realistic, controlled environment with which to measure and compare the efficacy of attackers. In this work, we examine the details of vulnerability discovery and attacker behavior with the goal of improving existing vulnerability assessment processes using data from the 2019 Collegiate Penetration Testing Competition (CPTC). We constructed 98 timelines of vulnerability discovery and exploits for 37 unique vulnerabilities discovered by 10 teams of penetration testers. We grouped related vulnerabilities together by mapping to Common Weakness Enumerations and MITRE ATT&CK(TM). We found that (1) vulnerabilities related to improper resource control (e.g., session fixation) are discovered faster and more often, as well as exploited faster, than vulnerabilities related to improper access control (e.g., weak password requirements), (2) there is a clear process followed by penetration testers of discovery/collection to lateral movement/pre-attack. Our methodology facilitates quicker analysis of vulnerabilities in future CPTC events.

Publisher Site: ACM Digital Library

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{meyers2022examining,

title = {Examining penetration tester behavior in the collegiate penetration testing competition},

author = {Meyers, Benjamin S and Almassari, Sultan Fahad and Keller, Brandon N and Meneely, Andrew},

journal = {ACM Transactions on Software Engineering and Methodology (TOSEM)},

volume = {31},

number = {3},

pages = {1--25},

year = {2022},

publisher = {ACM New York, NY}

}

[J-2022-07] Kothari, Rakshit S. and Bailey, Reynold J. and Kanan, Christopher and Pelz, Jeff B. and Diaz, Gabriel J. (2022). “EllSeg-Gen, Towards Domain Generalization for Head-mounted Eyetracking.” Proceedings of the ACM on Human-Computer Interaction.

Abstract: The study of human gaze behavior in natural contexts requires algorithms for gaze estimation that are robust to a wide range of imaging conditions. However, algorithms often fail to identify features such as the iris and pupil centroid in the presence of reflective artifacts and occlusions. Previous work has shown that convolutional networks excel at extracting gaze features despite the presence of such artifacts. However, these networks often perform poorly on data unseen during training. This work follows the intuition that jointly training a convolutional network with multiple datasets learns a generalized representation of eye parts. We compare the performance of a single model trained with multiple datasets against a pool of models trained on individual datasets. Results indicate that models tested on datasets in which eye images exhibit higher appearance variability benefit from multiset training. In contrast, dataset-specific models generalize better onto eye images with lower appearance variability.

Publisher Site: ACM Digital Library

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{kothari2022ellseg,

title = {EllSeg-Gen, towards Domain Generalization for head-mounted eyetracking},

author = {Kothari, Rakshit S and Bailey, Reynold J and Kanan, Christopher and Pelz, Jeff B and Diaz, Gabriel J},

journal = {Proceedings of the ACM on Human-Computer Interaction},

volume = {6},

number = {ETRA},

pages = {1--17},

year = {2022},

publisher = {ACM New York, NY, USA}

}

[J-2022-06] Grant, Michael and Kunz, M. Ross and Iyer, Krithika and Held, Leander I. and Tasdizen, Tolga and Aguiar, Jeffery A. and Dholabhai, Pratik P. (2022). “Integrating Atomistic Simulations and Machine Learning to Design Multi-principal Element Alloys with Superior Elastic Modulus.” Journal of Materials Research.

Abstract: Multi-principal element, high entropy alloys (HEAs) are an emerging class of materials that have found applications across the board. Owing to the multitude of possible candidate alloys, exploration and compositional design of HEAs for targeted applications is challenging since it necessitates a rational approach to identify compositions exhibiting enriched performance. Here, we report an innovative framework that integrates molecular dynamics and machine learning to explore a large chemical-configurational space for evaluating elastic modulus of equiatomic and non-equiatomic HEAs along primary crystallographic directions. Vital thermodynamic properties and machine learning features have been incorporated to establish fundamental relationships correlating Young's modulus with Gibbs free energy, valence electron concentration, and atomic size difference. In HEAs, as the number of elements increases, interactions between the elastic modulus values and features become increasingly nested, but tractable as long as non-linearity is accounted. Basic design principles are offered to predict HEAs with enhanced mechanical attributes.

Publisher Site: SpringerLink

PDF (Requires Sign-In): PDF

Click for Bibtex

@article{grant2022integrating,

title = {Integrating atomistic simulations and machine learning to design multi-principal element alloys with superior elastic modulus},

author = {Grant, Michael and Kunz, M Ross and Iyer, Krithika and Held, Leander I and Tasdizen, Tolga and Aguiar, Jeffery A and Dholabhai, Pratik P},

journal = {Journal of Materials Research},

volume = {37},

number = {8},

pages = {1497--1512},

year = {2022},

publisher = {Springer}

}

[J-2022-05] Dholabhai, Pratik P. (2022). “Oxygen Vacancy Formation and Interface Charge Transfer at Misfit Dislocations in Gd-Doped CeO2/MgO Heterostructures.” The Journal of Physical Chemistry C.

Abstract: Among numerous functionalities of mismatched complex oxide thin films and heterostructures, their application as next-generation electrolytes in solid oxide fuel cells has shown remarkable promise. In thin-film oxide electrolytes, although misfit dislocations ubiquitous at interfaces play a critical role in ionic transport, fundamental understanding of their influence on oxygen vacancy formation and passage is nevertheless lacking. Herein, we report first-principles density functional theory calculations to elucidate the atomic and electronic structures of misfit dislocations in the CeO2/MgO heterostructure for the experimentally observed epitaxial relationship. Thermodynamic stability of the structure corroborates recent results demonstrating that the 45 degree rotation of the CeO2 thin film eliminates the surface dipole, resulting in experimentally observed epitaxy. The energetics and electronic structures of oxygen vacancy formation near gadolinium dopants at misfit dislocations are evaluated, which demonstrate complex tendencies as compared to the grain interior and surfaces of ceria. The interface charge transfer mechanism is studied for defect-free and defective interfaces. Since the atomic and electronic structures of misfit dislocations at complex oxide interfaces and their influence on interface charge transfer and oxygen vacancy defect formation have not been studied in the past, this work offers new opportunities to unravel the untapped potential of oxide heterostructures.

Publisher Site: ACS Publications

PDF (Requires Sign-In): PDF

Bibtex:

@article{dholabhai2022oxygen,

title = {Oxygen Vacancy Formation and Interface Charge Transfer at Misfit Dislocations in Gd-Doped CeO2/MgO Heterostructures},

author = {Dholabhai, Pratik P},

journal = {The Journal of Physical Chemistry C},

volume = {126},

number = {28},

pages = {11735--11750},

year = {2022},

publisher = {ACS Publications}

}

[J-2022-04] Chaudhary, Aayush K. and Nair, Nitinraj and Bailey, Reynold J. and Pelz, Jeff B. and Talathi, Sachin S. and Diaz, Gabriel J. (2022). “Temporal RIT-Eyes: From Real Infrared Eye-Images to Synthetic Sequences of Gaze Behavior.” IEEE Transactions on Visualization and Computer Graphics.

Abstract: Current methods for segmenting eye imagery into skin, sclera, pupil, and iris cannot leverage information about eye motion. This is because the datasets on which models are trained are limited to temporally non-contiguous frames. We present Temporal RIT-Eyes, a Blender pipeline that draws data from real eye videos for the rendering of synthetic imagery depicting natural gaze dynamics. These sequences are accompanied by ground-truth segmentation maps that may be used for training image-segmentation networks. Temporal RIT-Eyes relies on a novel method for the extraction of 3D eyelid pose (top and bottom apex of eyelids/eyeball boundary) from raw eye images for the rendering of gaze-dependent eyelid pose and blink behavior. The pipeline is parameterized to vary in appearance, eye/head/camera/illuminant geometry, and environment settings (indoor/outdoor). We present two open-source datasets of synthetic eye imagery: sGiW is a set of synthetic-image sequences whose dynamics are modeled on those of the Gaze in Wild dataset, and sOpenEDS2 is a series of temporally non-contiguous eye images that approximate the OpenEDS-2019 dataset. We also analyze and demonstrate the quality of the rendered dataset qualitatively and show significant overlap between latent-space representations of the source and the rendered datasets.

Publisher Site: IEEE Xplore

PDF (Requires Sign-In): PDF

Bibtex:

@article{chaudhary2022temporal,

title = {Temporal RIT-Eyes: From Real Infrared Eye-Images to Synthetic Sequences of Gaze Behavior},

author = {Chaudhary, Aayush K. and Nair, Nitinraj and Bailey, Reynold J. and Pelz, Jeff B. and Talathi, Sachin S. and Diaz, Gabriel J.},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {},

number = {},

pages = {1--11},

year = {2022},

publisher = {IEEE},

doi = {10.1109/TVCG.2022.3203100}

}

[J-2022-03] Butler, Victoria L. and Feder, Richard M. and Daylan, Tansu and Mantz, Adam B. and Mercado, Dale and Montaña, Alfredo and Portillo, Stephen K.N. and Sayers, Jack and Vaughan, Benjamin J. and Zemcov, Michael and Others. (2022). “Measurement of the Relativistic Sunyaev-Zeldovich Correction in RX J1347.5-1145.” The Astrophysical Journal.

Abstract: We present a measurement of the relativistic corrections to the thermal Sunyaev-Zel'dovich (SZ) effect spectrum, the rSZ effect, toward the massive galaxy cluster RX J1347.5-1145 by combining submillimeter images from Herschel-SPIRE with millimeter wavelength Bolocam maps. Our analysis simultaneously models the SZ effect signal, the population of cosmic infrared background galaxies, and the galactic cirrus dust emission in a manner that fully accounts for their spatial and frequency-dependent correlations. Gravitational lensing of background galaxies by RX J1347.5-1145 is included in our methodology based on a mass model derived from the Hubble Space Telescope observations. Utilizing a set of realistic mock observations, we employ a forward modeling approach that accounts for the non-Gaussian covariances between the observed astrophysical components to determine the posterior distribution of SZ effect brightness values consistent with the observed data. We determine a maximum a posteriori (MAP) value of the average Comptonization parameter of the intracluster medium (ICM) within R_2500 to be <y>_2500 = 1.56 x 10^-4, with corresponding 68% credible interval [1.42, 1.63] x 10^-4, and a MAP ICM electron temperature of <T_sz>_2500 = 22.4 keV with 68% credible interval spanning [10.4, 33.0] keV. This is in good agreement with the pressure-weighted temperature obtained from Chandra X-ray observations, <T_x,pw>_2500 = 17.4 +/- 2.3 keV. We aim to apply this methodology to comparable existing data for a sample of 39 galaxy clusters, with an estimated uncertainty on the ensemble mean <T_sz>_2500 at the ~= 1 keV level, sufficiently precise to probe ICM physics and to inform X-ray temperature calibration.

Publisher Site: IOP Science

PDF (Requires Sign-In): PDF

Bibtex:

@article{butler2022measurement,

title = {Measurement of the Relativistic Sunyaev--Zeldovich Correction in RX J1347.5-1145},

author = {Butler, Victoria L and Feder, Richard M and Daylan, Tansu and Mantz, Adam B and Mercado, Dale and Monta{\~n}a, Alfredo and Portillo, Stephen KN and Sayers, Jack and Vaughan, Benjamin J and Zemcov, Michael and others},

journal = {The Astrophysical Journal},

volume = {932},

number = {1},

pages = {55},

year = {2022},

publisher = {IOP Publishing}

}

[J-2022-02] Buchanan, Natalie and Provenzano, Joules and Padmanabhan, Poornima. (2022). “A Tunable, Particle-Based Model for the Diverse Conformations Exhibited by Chiral Homopolymers.” Macromolecules.

Abstract: Chiral block copolymers capable of hierarchical self-assembly can also exhibit chirality transfer, the transfer of chirality at the monomer or conformational scale to the self-assembly. Prior studies focused on experimental and theoretical methods that are unable to fully decouple the thermodynamic origins of chirality transfer and necessitate the development of particle-based models that can be used to quantify intrachain, interchain, and entropic contributions. With this goal in mind, in this work, we developed a parametrized coarse-grained model of a chiral homopolymer and extensively characterized the resulting conformations. Specifically, the energetic parameter in the angular and dihedral potentials, the angular set point, and the dihedral set point are systematically varied to produce a wide range of conformations from a random coil to a nearly ideal helix. The average helicity, pitch, persistence length, and end-to-end distance are measured, and correlations between the model parameters and resulting conformations are obtained. Using available experimental data on model polypeptoid-based chiral polymers, we back out the required parameters that produce similar pitch and persistence length ratios reported in the experiments. The conformations for the experimentally matched chains appear to be somewhat flexible, exhibiting some helical turns. Our model is versatile and can be used to perform molecular dynamics simulations of chiral block copolymers and even sequence-specific polypeptides to study their self-assembly and to gain thermodynamic insights.

Publisher Site: ACS Publications

PDF (Requires Sign-In): PDF

Bibtex:

@article{buchanan2022tunable,

title = {A Tunable, Particle-Based Model for the Diverse Conformations Exhibited by Chiral Homopolymers},

author = {Buchanan, Natalie and Provenzano, Joules and Padmanabhan, Poornima},

journal = {Macromolecules},

year = {2022},

publisher = {ACS Publications}

}

[J-2022-01] Brakensiek, Joshua and Heule, Marijn and Mackey, John and Narváez, David. (2022). “The Resolution of Keller’s Conjecture.” Journal of Automated Reasoning.

Abstract: We consider three graphs, G7,3, G7,4, and G7,6, related to Keller's conjecture in dimension 7. The conjecture is false for this dimension if and only if at least one of the graphs contains a clique of size 2^7 = 128. We present an automated method to solve this conjecture by encoding the existence of such a clique as a propositional formula. We apply satisfiability solving combined with symmetry-breaking techniques to determine that no such clique exists. This result implies that every unit cube tiling of R^7 contains a facesharing pair of cubes. Since a faceshare-free unit cube tiling of R^8 exists (which we also verify), this completely resolves Keller's conjecture.

Publisher Site: SpringerLink

PDF (Requires Sign-In): PDF

Bibtex:

@article{brakensiek2022resolution,

title = {The resolution of Keller's conjecture},

author = {Brakensiek, Joshua and Heule, Marijn and Mackey, John and Narv{\'a}ez, David},

journal = {Journal of Automated Reasoning},

pages = {1--24},

year = {2022},

publisher = {Springer}

}

[J-2021-09] Buchanan, Natalie and Browka, Krysia and Ketcham, Lianna and Le, Hillary and Padmanabhan, Poornima. (2021). “Conformational and Topological Correlations in Non-Frustated Triblock Copolymers with Homopolymers.” Soft Matter.

Abstract: The phase behavior of non-frustrated ABC block copolymers, modeling poly(isoprene-b-styrene-b-ethylene oxide) (ISO), is studied using dissipative particle dynamics (DPD) simulations. The phase diagram showed a wide composition range for the alternating gyroid morphology, which can be transformed to a chiral metamaterial. A quantitative analysis of topology was developed that correlates the location of a block relative to the interface with the block's end-to-end distance. This analysis showed that the A-blocks stretched as they were located deeper in the A-rich region. To further expand the stability of the alternating gyroid phase, A-selective homopolymers of different lengths were co-assembled with the ABC copolymer at several compositions. Topological analysis showed that homopolymers with lengths shorter than or equal to the A-block length filled the middle of the networks, decreasing packing frustration and stabilizing them, while longer homopolymers stretched across the network but allowed for the formation of stable, novel morphologies. Adding homopolymers to triblock copolymer melts increases tunability of the network, offering greater control over the final stable phase and bridging two separate regions in the phase diagram.

Publisher Site: RSC.org

PDF (Requires Sign-In): PDF

Bibtex:

@article{buchanan2021conformational,

title = {Conformational and topological correlations in non-frustated triblock copolymers with homopolymers},

author = {Buchanan, Natalie and Browka, Krysia and Ketcham, Lianna and Le, Hillary and Padmanabhan, Poornima},

journal = {Soft Matter},

volume = {17},

number = {3},

pages = {758--768},

year = {2021},

publisher = {Royal Society of Chemistry}

}

[J-2021-08] Uche, Obioma U. and Le, Han G. and Brunner, Logan B. (2021). “Size-selective, Rapid Dynamics of Large, Hetero-epitaxial Islands on fcc(0 0 1) Surfaces.” Computational Materials Science.

Abstract: In this work, we use molecular dynamics simulations to investigate the diffusion of two-dimensional, hexagonal silver islands on copper and nickel substrates below room temperature. Our results indicate that certain sized islands diffuse orders of magnitude faster than other islands and even single atoms under similar conditions. An analysis of several low-energy pathways reveals two governing processes: a rapid, glide-centric process and a slower, vacancy-assisted one. The relative magnitude of the energies required to nucleate a vacancy plays a role in determining the size-selective diffusion of these islands. In addition, we propose a model which can be used to predict magic-sized islands in related systems. These findings should provide insight to future experimental research on the size distribution and shapes observed during the growth of metal-on-metal thin films.

Publisher Site: ScienceDirect